Apache Spark recently gained a lot of popularity, compared to other Big Data tools because of its updated and faster in-memory computation that processes data so much more efficiently. It is also preferred by many industry tycoons since Spark can implement Machine Learning tasks, SQL integration, streaming, and graph computation, on large Data Sets saving huge operational costs.

Companies like IBM and Huawei have invested a lot in Spark, foreseeing the significance of the general data processing engine in extending their Big Data products into fields of Machine Learning and interactive querying. With the same idea, several start-ups are rapidly building their basis of business on Spark.

The IT industry is rapidly recruiting programmers with additional know-how Spark along with other programming languages such as Java, Python, Scala, and R. There is currently an ever-growing need for application developers and data engineers with a knowledge of Spark to incorporate it into their programs.

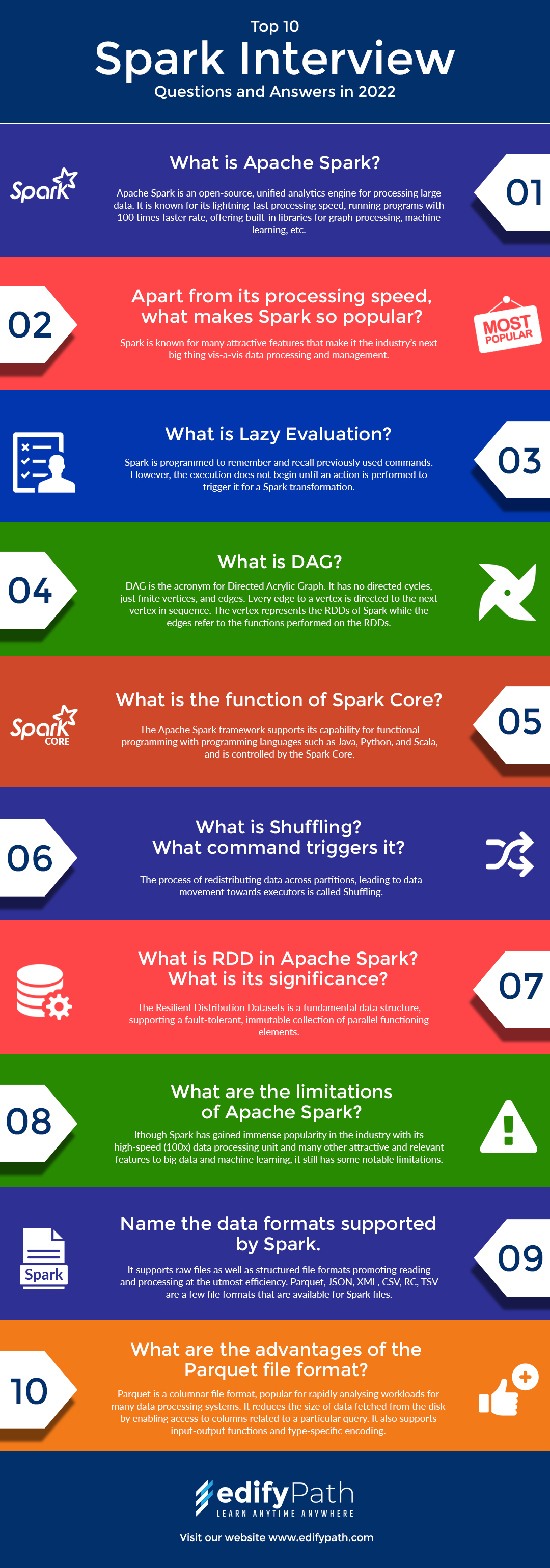

Here we bring, the top 10 Spark interview questions and answers for freshers, as well as experienced professionals to ace any Apache Spark Interview.

1. What is Apache Spark?

Apache Spark is an open-source, unified analytics engine for processing large data. It is known for its lightning-fast processing speed, running programs with 100 times faster rate, offering built-in libraries for graph processing, machine learning, etc. Users are provided with an option to run it on the cluster or stand-alone mode, to access various data sources like HBase, HDFS, etc.

The execution engine supports cyclic data flow and works with in-memory computation.

2. Apart from its processing speed, what makes Spark so popular?

Spark is known for many attractive features that make it the industry’s next big thing vis-a-vis data processing and management. Some of its innate features are:

a. In-memory Computation: The DAG execution engine allows the in-memory computation feature, and fetching data from external drives to process faster. The feature also supports data caching.

b. Reusability: Codes written in Spark have multiple usages in data streaming, ad-hoc queries, etc.

c. Supports multiple programming languages: Spark is integrated with many coding languages like Python, Scala, Java, and R. This provides the user with programming flexibility.

The multi-lingual flexibility also overcomes the older Hadoop limitation where users could only code using Java.

d. Cost Efficient: Being an open-source processing unit, Apache Spark is a comparatively better solution, than Hadoop because it does not need large storage data centres for processing.

e. Flexible integration: Spark also supports Hadoop YARN.

3. What is Lazy Evaluation?

Spark is programmed to remember and recall previously used commands. However, the execution does not begin until an action is performed to trigger it for a Spark transformation.

4. What is DAG?

DAG is the acronym for Directed Acrylic Graph. It has no directed cycles, just finite vertices, and edges. Every edge to a vertex is directed to the next vertex in sequence. The vertex represents the RDDs of Spark while the edges refer to the functions performed on the RDDs.

5. What is the function of Spark Core?

The Apache Spark framework supports its capability for functional programming with programming languages such as Java, Python, and Scala, and is controlled by the Spark Core.

6. What is Shuffling? What command triggers it?

The process of redistributing data across partitions, leading to data movement towards executors is called Shuffling.

Shuffling occurs when two tables are joined, or if the user is performing the ByKey functions- GroupByKey or ReduceByKey.

It has two compression domains-

1. Spark.shuffle.compress which checks the engine for compressed shuffle outputs and

2. Spark.shuffle.spill.compress which decides whether or not to compress transitioning shuffle spill files.

7. What is RDD in Apache Spark? What is its significance?

The Resilient Distribution Datasets is a fundamental data structure, supporting a fault-tolerant, immutable collection of parallel functioning elements.

Spark implements the concept of RDDs to achieve efficient MapReduce functions. The data is partitioned into two logical partitions, creating RDDs in two different ways:

● Parallelized Datasets: Collections/ libraries meant to function parallel to other operations.

● Hadoop Datasets: Datasets that perform functions on file record systems on storage systems like the HDFS and HBase.

8. What are the limitations of Apache Spark?

Although Spark has gained immense popularity in the industry with its high-speed (100x) data processing unit and many other attractive and relevant features to big data and machine learning, it still has some notable limitations.

They include:

- File Management System: Spark has no independent file management system. It mostly relies on other file systems like HDFS, cloud-based, or otherwise.

- Additional memory requirement: Even though Apache Spark is cost-effective in processing large data, storing the data in the memory is not. Running Spark requires a good amount of storage space which requires users to invest in extra RAM, whose cost is very high.

- Buffer and transferring Data: In Spark, it is impossible to transfer data until the buffer is empty. Additionally, when the buffer is full it stops receiving data, and thus handling the pressure of data has to be done manually.

9. Name the data formats supported by Spark.

It supports raw files as well as structured file formats promoting reading and processing at the utmost efficiency. Parquet, JSON, XML, CSV, RC, TSV are a few file formats that are available for Spark files.

10. What are the advantages of the Parquet file format?

Parquet is a columnar file format, popular for rapidly analysing workloads for many data processing systems.

It reduces the size of data fetched from the disk by enabling access to columns related to a particular query. It also supports input-output functions and type-specific encoding.

CONCLUSION

Apache Spark has become a lucrative technology in the field of Machine Learning and analysing Big Data. It is the fastest-growing computational platform that supports different libraries and large tools.

Along with changing the paradigm of data analysis, Spark is rapidly opening doors for programmers in various career fields such as health, finance, security, and E-commerce across the world.

©️ 2024 Edify Educational Services Pvt. Ltd. All rights reserved. | The logos used are the trademarks of respective universities and institutions.